Hypercritical

As far back as I can remember, I was told I would grow up to be an artist. By age six, my obsessively detailed renderings of Mechagodzilla, et al. were already drawing attention from adults. By the time I was eight years old, my parents had been persuaded by teachers and friends to enroll me in private art lessons.

I recall the informal “admissions test” with my first art instructor. A scale model of a bull was placed in front of me on a table and I was asked to draw it. The plastic bull was a faithful reproduction, full of muscles and knobby joints. It was an ugly, forlorn thing, far removed from my normal subject matter. After a few minutes, the resulting drawing was roughly in proportion, the details were well represented, and the perspective was pretty close. I was in.

Thus began eight years of regular art instruction. I progressed from pencils and pastels to watercolors and acrylics, and finally to oils. The content was mostly classical: lots of still lifes and landscapes. Meanwhile, back at home, I slowly covered my bedroom walls (and some of the ceiling) with colored-pencil and chalk-pastel recreations of Larry Elmore and Keith Parkinson book covers, fantasy calendar art, and as much imported Japanese animation as I could get my pre-Internet-suburban-child’s hands on.

I enjoyed both the process and the results. But long before my art lessons stopped around age sixteen, I knew I would never be a professional artist. Partly, this was just a milder incarnation of other children’s realizations that they would never be, say, Major League Baseball players. But the real turning point for me came with the onset of puberty and its accompanying compulsive self-analysis. I realized that I owed what success I had as an artist not to any specific art-related aptitude, but rather to a more general and completely orthogonal skill.

Drawing what you actually see—that is, drawing the plastic bull that’s in front of you rather than the simplified, idealized image of a bull that’s in your head—is something that does not come naturally to most people, let alone children. At its root, my gift was not the ability to draw what I saw. Rather, it was the ability to look at what I had drawn thus far and understand what was wrong with it.

While other children were satisfied with their loosely connected conglomerations of orbs and sticks, I saw something that bore little resemblance to its subject. And so, in my own work, I attempted to make the necessary corrections. When that failed, as it inevitably did, I started over. Again and again and again, each time making minor improvements, but all the while still seeing all the many ways that I had failed to persuade my body to produce the correct line or apply the appropriate coloring.

By my early teens, the truth of it was staring at me from the walls of my room, covered not with original artwork but with slavish reproductions of other works. Copying completed two-dimensional images played perfectly to my actual strengths. It was a trick. All that praise for my work and all those expectations for my future career in art were simply misattributions of my talent.

I was like Wolverine, whose superpower is not his nigh-indestructible skeleton or super-sharp metal claws, but rather his body’s ability to heal, which made his surgical augmentation possible, and which allows those claws to repeatedly pierce his hands without causing permanent injury.

If ignorance is bliss…

This acute awareness of deficiencies colors all my memories of childhood. Toys, in particular, were a focal point of dissatisfaction. I didn’t understand why toy manufacturers couldn’t see the countless ways that their products differed from the on-screen characters, machinery, or structures that they were based on. Transforming toys were the biggest offenders, as it was often physically impossible for all configurations to look correct. (I would have been satisfied with just one, but even that rarely happened.)

But my scrutiny was not limited to my own artwork or the products of multinational conglomerates. Oh no, it extended to everything I encountered. This pasta is slightly over-cooked. The top of that door frame is not level. Some paint from that wall got onto the ceiling. Text displayed in 9-point Monaco exhibits a recurring one-pixel spacing anomaly in this operating system. Ahem.

Now, at this point, it’s reasonable to ask, “Have you considered the possibility that you’re just an excessively critical jerk?” I can tell you that, over the years, I have dwelled quite a bit on my…“peculiar predisposition,” let’s call it.

The drawbacks are obvious. Knowing what’s wrong with something (or thinking that you do, which, for the purposes of this discussion, should be considered the same thing) does a fat lot of good if you lack the skills to correct it. And thinking that you know what’s wrong with everything requires significant impulse control if you want to avoid pissing off everyone you meet.

But much worse than that, it means that everything you ever create appears to you as an accumulation of defeats. “Here’s where I gave up trying to get that part right and moved on to the next part.” Because at every turn, it’s apparent to you exactly how poorly executed your work-in-progress is, and how far short it will inevitably fall when completed. But surrender you must, at each step of the process, because the alternative is to never complete anything—or to never start at all.

If you only knew the power of the dark side

Lest you think seeing the world through these eyes is some kind of curse, let me move a bit closer to the point of this heartwarming bout of navel-gazing. Yes, it’s true that a critic’s eye is useless without an artist’s hand. But an artist without a critical eye is even more ineffectual.

I’m using the terms “artist” and “critic” broadly here; this applies to any endeavor undertaken by any group of people. This opens the door for the artist and the critic to be separate people, as they most often are. The movie critic is likely incapable of making a better movie than the one he’s reviewing, but his criticism, at its best, can help others think in new ways about what makes a movie great. And among these “others” are the filmmakers themselves, of course. It’s a virtuous cycle created through apparent viciousness.

In the technology industry, the interplay of creator and critic has only begun to take shape in the past few decades. Computers, in particular, have historically been measured with instruments and stopwatches rather than thoughts or—Turing forbid!—feelings.

This is sad because there are ample precedents to draw from. Take cars, for example. Like computers, they’re undeniably machines, and their objective performance characteristics are measured far and wide. But there’s also a healthy tradition of insightful and provocative automotive analysis beyond the raw numbers. What does it feel like to drive a Ferrari, and how is it different from a Viper? How successful is the styling of the Lexus LS400 at conveying the intended message of Toyota’s new luxury brand? What influence does the particular kind of plastic used on a dashboard have on overall driver satisfaction?

It also probably helps that there’s no energy wasted arguing about whether or not cars are “art.” Consider the poor video game industry, by all reasonable measures the most obvious computer-native form of art. Even it can’t catch a break. Instead of reaping the benefits of serious analysis, it’s bogged down in unending debates about its worthiness, and those who dare to talk about the problem are lambasted as pretentious killjoys.

But computers themselves—what we used to call personal computers, now joined by phones, music players, and other devices—still seem largely exempt from this process. This, perhaps, explains why most PCs provide an unsatisfying consumer experience. Oh, sure, geeks love them, but you’ll find far fewer regular people who express any sort of devotion to their Dell or HP PCs than to even the worst of the soon-to-be-defunct American car brands.

How is it that Pontiac and Oldsmobile managed to find their way into the hearts and minds of consumers (ask your parents about it) while perfectly competent Dell and HP have not? I contend that it’s at least partly because the auto industry has enjoyed a rich history of subjective, high-quality criticism, while the technology industry has been busy measuring megahertz and counting bits.

There’s at least one exception that springs to mind, of course. Devoted customers? Subjective quality over objective specifications? Feelings? Yep, that’s Apple.

So how has Apple done it? How has it overcome the lack of a healthy ecosystem of hardware and software criticism (in the artistic sense) to produce products that succeed despite glaring deficits in nearly all empirical measures? Well, it cheated.

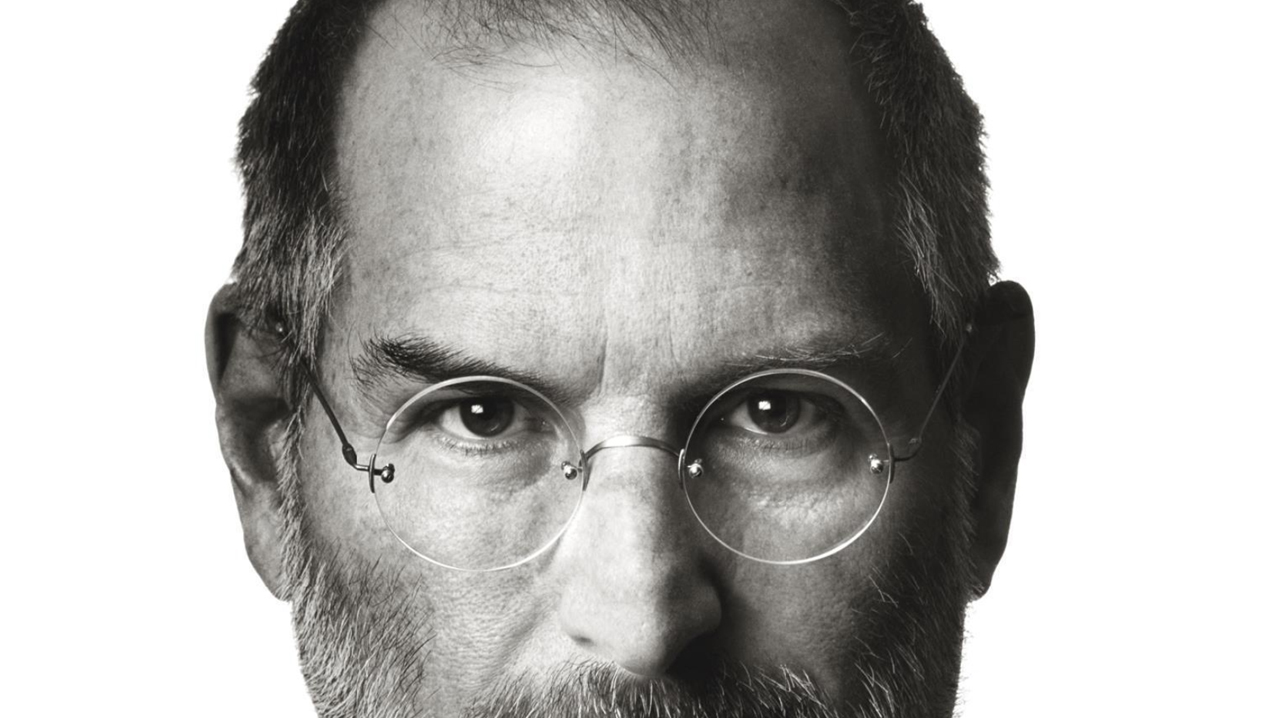

Instead of relying on external critics to create that virtuous feedback cycle, Apple leveraged some in-house talent. His name is Steven Paul Jobs. Maybe you’ve heard of him?

Steve and me

By all accounts, Steve Jobs is no engineer. He was never a programming maven like Bill, nor was he a hardware whiz like Woz. On his own, Jobs could not create much of anything. But that’s not his superpower.

Though a good critic can influence the community of creators, in the end, he does not create anything himself. But take that critic and put him in charge of the creators. Make him, let’s say, the founder, CEO, and spiritual leader of several thousand of the most talented engineers and artists in the computer industry. What might happen then?

I’ll tell you what happens: the iMac, the iPod, iTunes, Mac OS X, iLife, the iPhone. I believe Steve Jobs’s “peculiar predisposition” is not so unlike mine. (There’s a reason that “This is shit!” is one of his best-known catchphrases, after all.) I further believe that, seemingly against all odds, Jobs has found a way to parlay this skill into an improbable orgy of creation. He is Apple’s übercritic: one man to pare a torrent of creativity and expertise down to a handful of truly great products by picking apart every prototype, challenging every idea, and finding the flaws that no one else can see.

Knowing what’s wrong is a prerequisite for fixing it. That may sound trite, but it’s actually one step ahead of most of the computer industry. Knowing that something is wrong is a prerequisite for figuring out what’s wrong. Most tech companies would do well to start with that.

How about knowing exactly what’s wrong and being in a position to demand that it be fixed before a product sees the light of day? That’s pretty great—when you’re right. Unfortunately, no one is infallible. The collective wisdom of a stable of independent critics would be an important moderating influence here.

And what about me? What have I parlayed my critical nature into? Well, for one thing, it let me turn some basic manual dexterity into a childhood filled with artistic creation. In my adult life, I suppose it wouldn’t be an exaggeration to describe my best-known body of work as several hundred thousand words about exactly what is wrong (and, to be fair, also what’s right) with Mac OS X. Though it may sound depressing to compulsively find fault in everything, I’ve found that the benefits definitely outweigh the drawbacks.

Practice breaks perfect

Even at the extreme end of the spectrum, I have a few kindred spirits. In fact, most geeks have this inclination to some degree, even if it’s just nitpicking logical or scientific flaws in a favorite TV show or movie. This is actually a skill worth developing. Have you ever met someone who holds strong opinions but is completely incapable of explaining them? “I really hated that book.” “Why?” “I don’t know, I just didn’t like it.” Who wants to be that guy? That’s no way to live.

Maybe you’re afraid you don’t know enough about anything to trust the validity of your criticism. Putting aside the extremely small likelihood that this is actually true, it shouldn’t stop you. Developing your critical thinking skills necessarily involves applying your critical thinking skills to…your critical thinking skills. It’s recursively self-healing!

Even if you can’t bring yourself to see any value in increasing your awareness and understanding of every little thing that’s wrong with the world, I’d venture to guess that, if you’ve read this far, you’re the kind of person who at least enjoys the results of others who practice this fine art.

Take the recent release of the Safari 4 beta, for example. The new “toppy tabs” user interface provoked some strong reactions. But the best of the best went far beyond a mere endorsement or condemnation of the changes. Simply expressing an opinion was not enough for these brave bloggers. They needed to understand why they liked or disliked Safari 4.

And true to form, there was plenty of recursive self-analysis. Do I dislike these new tabs only because they’re different? Are my old habits blinding me to the benefits of this new design? People really dove deep on this thing: generalizing, trying to find the underlying patterns, and changing their positions based on new analyses. This stuff is what makes the Mac web—and, by extension, albeit indirectly, the Mac itself—so great. At its best, it’s critics all the way down.

The upward surge of mankind

The point is, ladies and gentlemen, that criticism, for lack of a better word, is good. Criticism is right. Criticism works. Criticism clarifies, cuts through, and captures the essence of the evolutionary spirit…

Okay, let me try that again. Actually, I’m on the same page as Gordon Gekko in one important respect. Like greed, criticism gets a bad rap, especially when it’s presented in large doses. It’s impolite. It’s unnecessarily obsessive. It’s just a bummer. But the truth is, precious little in life gets fixed in the absence of a good understanding of what’s wrong with it to begin with.

This character flaw, this curse, this seemingly most useless of skills is actually the yin to the more widely recognized yang of creative talent. Is a preternatural ability to find fault enough on its own to make something great? Probably not, but it can help amplify mundane competencies and produce results well beyond what you could have achieved with your creative skills alone.

No, we can’t all be Steve Jobs, but there’s room in life for both the grand and the prosaic. Every day is a new chance to do something a little bit better (“I am the Steve Jobs of this sandwich!”), to find something wrong with what you’re doing and understand it well enough to know how to fix it. If this is not your natural proclivity, you may have to work at it a bit. I think you’ll be pleased with the results…but not completely, I hope.

This article originally appeared at Ars Technica. It is reproduced here with permission.