Hypercritical

Spatial Computing

The graphical user interface on the original Macintosh was a revelation to me when I first used it at the tender age of 8. Part of the magic was thanks to its use of “direct manipulation.” This term was coined in the 1980s to describe the ability to control a computer without using the keyboard to explain what you wanted it to do. Instead of typing a command to move a file from one place to another, the user could just grab it and drag it to a new location.

The fact that I’m able to write the phrase “grab it and drag it to a new location” and most people will understand what I mean is a testament to the decades-long success of this kind of interface. In the context of personal computers like the Mac, we all understand what it means to “grab” something on the screen and drag it somewhere using a mouse. We understand that the little pictures represent things that have meaning to both us and the computer, and we know what it means to manipulate them in certain ways. For most of us, it has become second nature.

With the advent of the iPhone and ubiquitous touchscreen interfaces, the phrase “direct manipulation” is now used to draw a contrast between touch interfaces and Mac-style GUIs. The iPhone has “direct manipulation.” The Mac does not. On an iPhone, you literally touch the thing you want to manipulate with your actual finger—no “indirect” pointing device needed.

The magic, the attractiveness, the fundamental success of both of these forms of “direct manipulation” have a lot to do with the physical reality of our existence as human beings. The ability to reason about and manipulate objects in space is a cornerstone of our success as a species. It is an essential part of every aspect of our lives. Millions of years of natural selection have made these skills a foundational component of our very being. We need these skills to survive, and so all of us survivors are the ones who have these skills.

Compare this with the things we often put under the umbrella of “knowing how to use computers”: debugging Wi-Fi problems, understanding how formulas work in Excel, splitting a bezier curve in Illustrator, converting a color image to black and white in Photoshop, etc. These are all things we must learn how to do specifically for the purpose of using the computer. There have not been millions of years of reproductive selection to help produce a modern-day population that inherently knows how to convert a PDF into a Word document. Sure, the ability to reason and learn is in our genes, but the ability to perform any specific task on a computer is not.

Given this, interfaces that leverage the innate abilities we do have are incredibly powerful. They have lower cognitive load. They feel good. “Ease of use” was what we called it in the 1980s.

The success of the GUI was driven, in large part, by the fact that our entire lives—and the lives of all our ancestors—have prepared us with many of the skills necessary to work with interfaces where we see things and then use our hands to manipulate them. The “indirection” of the GUI—icons that represent files, windows that represent documents that scroll within their frames—fades away very quickly. The mechanical functions of interaction become second nature, allowing us to concentrate on figuring out how the heck to remove the borders on a table in Google Docs[1], or whatever.

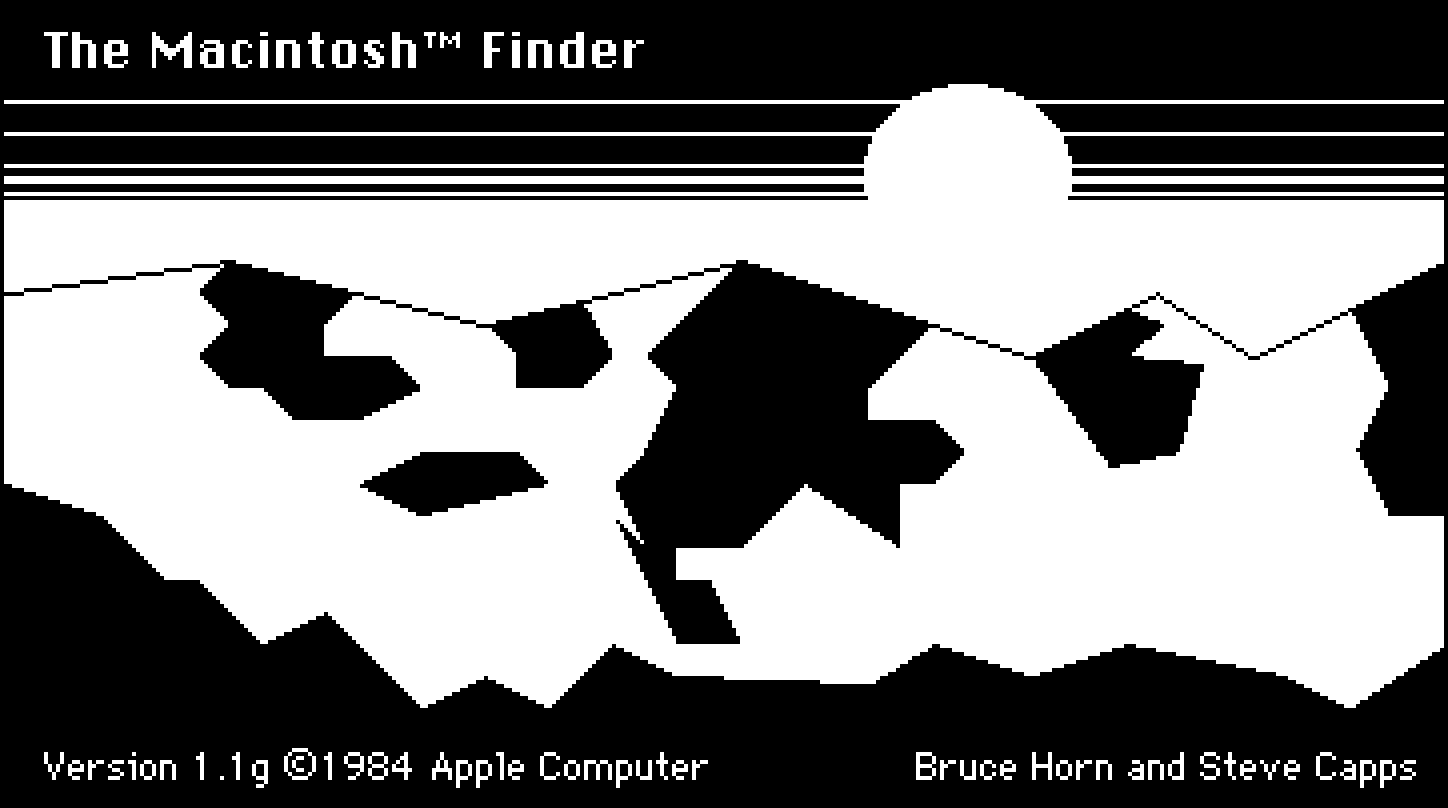

The more a user interface presents a world that is understandable to us, where we can flex our millennia-old kinesthetic skills, the better it feels. The Spatial Finder, which had a simple, direct relationship between each Finder window and a location in the file hierarchy, was a defining part of the classic Macintosh interface. Decades later, the iPhone launched with a similarly relentlessly spatial home-screen interface: a grid of icons, recognizable by their position and appearance, that go where we move them and stay where we put them.

Now here we are, 40 years after the original Macintosh, and Apple is introducing what it calls its first "spatial computer." I haven’t tried the Vision Pro yet (regular customers won’t receive theirs for at least another three days), but the early reviews and Apple’s own guided tour provide a good overview of its capabilities.

How does the Vision Pro stack up, spatially speaking? Is it the new definition of “direct manipulation,” wresting the title from touch interfaces? In one obvious way, it takes spatial interfaces to the next level by committing to the simulation of a 3D world in a much more thorough way than the Mac or iPhone. Traditional GUIs are often described as being “2D,” but they’ve all taken advantage of our ability to parse and understand objects in 3D space by layering interface elements on top of each other, often deploying visual cues like shadows to drive home the illusion.

Vision Pro’s commitment to the bit goes much further. It breaks the rigid perpendicularity and shallow overall depth of the layered windows in a traditional GUI to provide a much deeper (literally) world within which to do our work.

Where Vision Pro may stumble is in its interface to the deep, spatial world it provides. We all know how to reach out and “directly manipulate” objects in the real world, but that’s not what Vision Pro asks us to do. Instead, Vision Pro requires us to first look at the thing we want to manipulate, and then perform an “indirect” gesture with our hands to operate on it.

Is this look-then-gesture interaction any different than using a mouse to “indirectly” manipulate a pointer? Does it leverage our innate spatial abilities to the same extent? Time will tell. But I feel comfortable saying that, in some ways, this kind of Vision Pro interaction is less “direct” than the iPhone’s touch interface, where we see a thing on a screen and then literally place our fingers on it. Will there be any interaction on the Vision Pro that’s as intuitive, efficient, and satisfying as flick-scrolling on an iPhone screen? It’s a high bar to clear, that’s for sure.

As the Vision Pro finally starts to arrive in customers’ hands, I can’t help but view it through this spatial-interface lens when comparing it to the Mac and the iPhone. Both its predecessors took advantage of our abilities to recognize and manipulate objects in space to a greater extent than any of the computing platforms that came before them. In its current form, I’m not sure the same can be said of the Vision Pro.

Of course, there’s a lot more to the Vision Pro than the degree to which it taps into this specific set of human skills. Its ability to fill literally the entire space around the user with its interface is something the Mac and iPhone cannot match, and it opens the door to new experiences and new kinds of interfaces.

But I do wonder if the Vision Pro’s current interaction model will hold up as well as that of the Mac and iPhone. Perhaps there’s still at least one technological leap yet to come to round out the story. Or perhaps the tools of the past (e.g., physical keyboards and pointing devices) will end up being an essential part of a productive, efficient Vision Pro experience. No matter how it turns out, I’m happy to see that the decades-old journey of “spatial computing” continues.

[1] Select the whole table, then click the “Border width” toolbar icon, then select 0pt.